PyTorch

Integrating with PyTorch

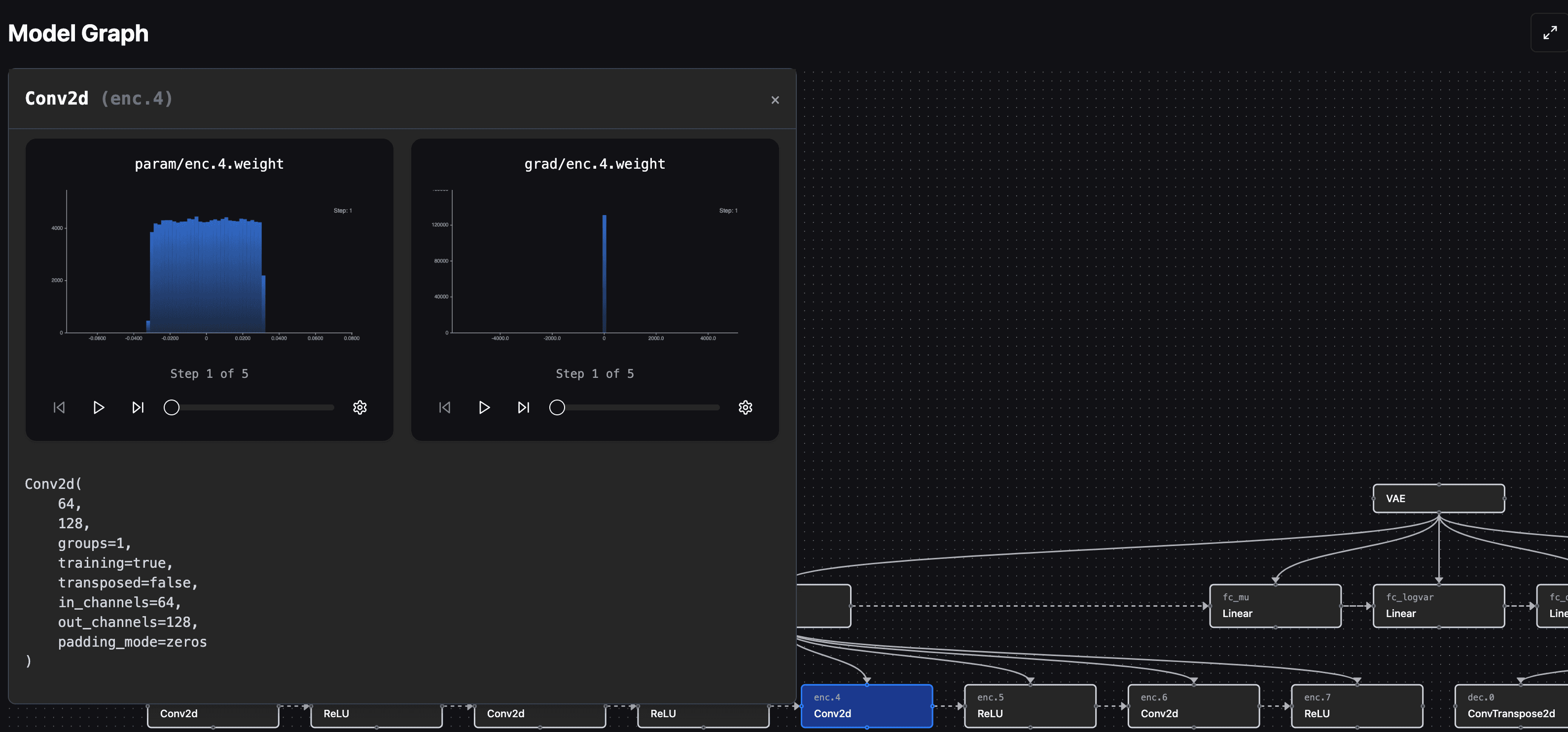

mlop supports PyTorch out of the box, with automatic logging of model parameters and gradients, graphs of model structure and data flow, and code profiling.

Migrating from Weights & Biases

See the Migrating from Weights & Biases guide for a quickstart.

Logging Model Details

mlop currently supports calling mlop.watch() directly on a PyTorch model to log model details. After initializing the logger, you can equivalently call run.watch() on the run object to track how the model graph evolves.

Source

See the Python code for more details.

As training progresses, histograms are created for all flattened gradients and parameters in the model. You can optionally set the logging frequency and the histogram resolution by passing freq and bins to mlop.watch().
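To make the roles of freq and bins concrete, here is a minimal, library-free sketch of the idea. It is not mlop's implementation: the helpers flatten, histogram, and watch_step are hypothetical names, and treating freq as "log on every freq-th step" is an assumption about its semantics. Plain nested lists stand in for PyTorch tensors.

```python
from typing import Any, Dict, List


def flatten(values: Any) -> List[float]:
    """Flatten an arbitrarily nested list of numbers into a 1-D list."""
    if isinstance(values, (int, float)):
        return [float(values)]
    flat: List[float] = []
    for item in values:
        flat.extend(flatten(item))
    return flat


def histogram(values: List[float], bins: int) -> List[int]:
    """Count values into `bins` equal-width buckets spanning [min, max]."""
    lo, hi = min(values), max(values)
    width = (hi - lo) / bins or 1.0  # fall back to 1.0 when all values are equal
    counts = [0] * bins
    for v in values:
        idx = min(int((v - lo) / width), bins - 1)  # clamp the maximum into the last bucket
        counts[idx] += 1
    return counts


def watch_step(step: int, named_tensors: Dict[str, Any], freq: int, bins: int) -> Dict[str, List[int]]:
    """Hypothetical helper: one histogram per tensor on every `freq`-th step, else nothing."""
    if step % freq != 0:
        return {}
    return {name: histogram(flatten(t), bins) for name, t in named_tensors.items()}


# Stand-in "parameters": a 2x2 weight matrix and a bias vector.
weights = {"layer.weight": [[0.0, 0.1], [0.5, 1.0]], "layer.bias": [0.2, 0.2]}
logged = {step: watch_step(step, weights, freq=2, bins=4) for step in range(4)}
```

In this sketch a larger freq means fewer logged steps, and a larger bins value means finer histogram resolution at the cost of more data per log entry.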

Example

This gives you visualizations as soon as model training starts.